NLPIR SEMINAR 23rd ISSUE COMPLETED

Last Monday, Zhaoyou Liu gave a presentation on the paper "Pay Less Attention with Lightweight and Dynamic Convolutions" and shared some opinions on it.

This paper was published as a conference paper at ICLR 2019.

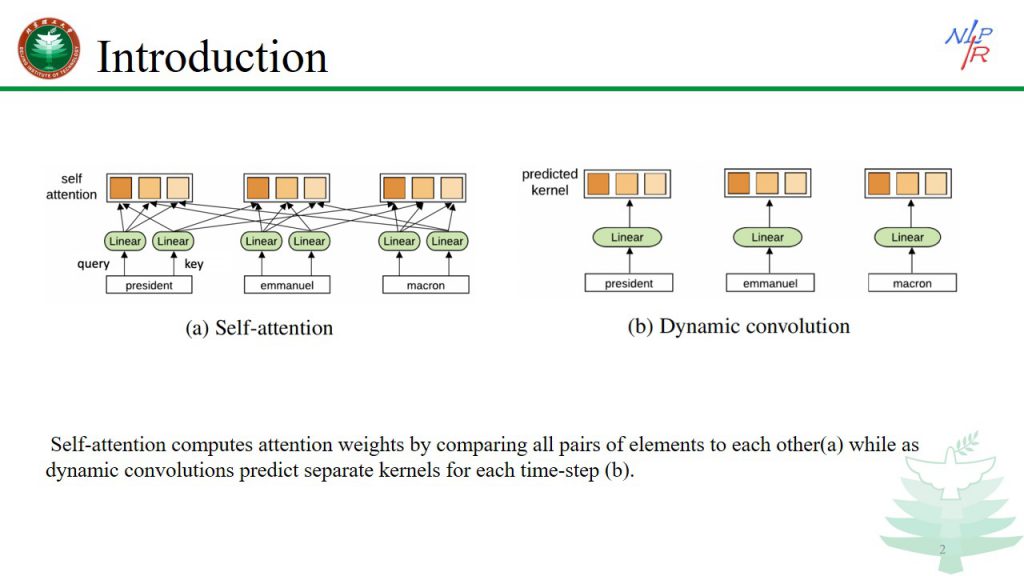

Dynamic convolutions build on lightweight convolutions by predicting a separate convolution kernel at every time step. The kernel is a function of the current time step only, as opposed to the entire context as in self-attention. In this respect the approach is similar to location-based attention, which also does not access the context to determine attention weights.
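To make the difference between the two operators concrete, here is a minimal pure-Python sketch. It assumes the formulation described in the paper: a lightweight convolution is a depthwise convolution whose kernel weights are softmax-normalized over the window and shared across H heads (channel c uses head c * H // d), and a dynamic convolution predicts those kernels at each time step through a linear map of the current input x_i alone. The function names (`conv_at`, `lightweight_conv`, `dynamic_conv`) and the projection matrix `w_q` are hypothetical. The sketch also assumes a centered window with zero padding (a decoder would need a causal window) and omits the surrounding projections and regularization of the full model.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def conv_at(x, i, raw_kernels):
    """Output at time step i of a softmax-normalized depthwise convolution.

    x           -- n time steps, each a list of d channel values
    raw_kernels -- H kernels (one per head), each a list of k unnormalized weights
    Channel c (0-indexed) uses the kernel of head c * H // d.
    """
    n, d = len(x), len(x[0])
    num_heads, k = len(raw_kernels), len(raw_kernels[0])
    kernels = [softmax(w) for w in raw_kernels]  # normalize each kernel over its window
    offset = k // 2  # centered window; positions outside [0, n) contribute zero
    out = []
    for c in range(d):
        w = kernels[c * num_heads // d]
        acc = 0.0
        for j in range(k):
            t = i + j - offset
            if 0 <= t < n:
                acc += w[j] * x[t][c]
        out.append(acc)
    return out


def lightweight_conv(x, raw_kernels):
    """Lightweight convolution: the same fixed kernels at every time step."""
    return [conv_at(x, i, raw_kernels) for i in range(len(x))]


def dynamic_conv(x, w_q, num_heads, k):
    """Dynamic convolution: kernels predicted from the current time step only.

    w_q -- (num_heads * k) x d projection matrix (hypothetical learned weights)
    """
    out = []
    for i, x_i in enumerate(x):
        # Kernel for step i depends on x_i alone, not on the rest of the sequence.
        flat = [sum(w * v for w, v in zip(row, x_i)) for row in w_q]
        raw_kernels = [flat[h * k:(h + 1) * k] for h in range(num_heads)]
        out.append(conv_at(x, i, raw_kernels))
    return out


if __name__ == "__main__":
    random.seed(0)
    n, d, H, k = 5, 4, 2, 3
    x = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(n)]
    w_q = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(H * k)]
    y = dynamic_conv(x, w_q, H, k)
    print(len(y), len(y[0]))  # 5 4
```

Because each kernel only looks at x_i and a fixed window of width k, the cost per position does not grow with sequence length, unlike self-attention, which compares every position with every other one.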

The experiments show that dynamic convolutions perform as well as or better than self-attention while requiring less computation time.
